Circumvent Facial Recognition With This Hat

There’s a constant push and pull when it comes to new security-related technologies. Researchers invent a new security system, others find a way to circumvent it, and still others come along and find a way to strengthen it further. It’s a never-ending cycle, and it certainly applies to facial-recognition software, which is now used in everything from airport security to unlocking our iPhone X handsets.

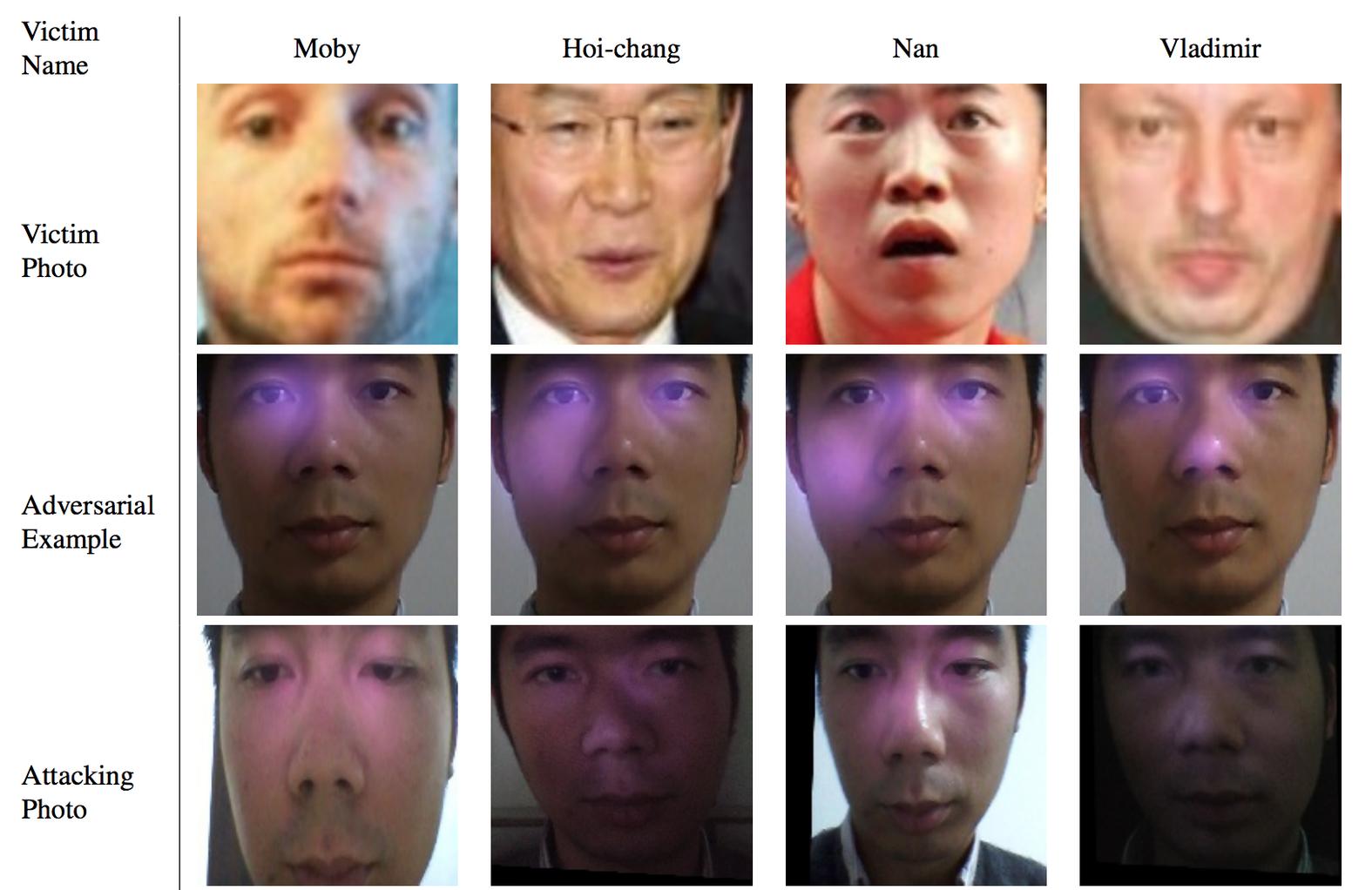

A new project carried out by researchers in China threatens to undermine that technology, however. The team built an LED-studded baseball cap that can trick facial-recognition systems into thinking that you are another person entirely. The smart (but scary) hack projects dots of infrared light onto the wearer’s face. Facial-recognition cameras pick up these dots and wrongly interpret them as facial features. In a demo, the researchers fooled facial-recognition cameras into identifying a person as someone else (including the singer Moby), and they report a success rate of more than 70 percent.

“Through launching this kind of attack, an attacker not only can dodge surveillance cameras; more importantly, he can impersonate his target victim and pass the face authentication system, if only the victim’s photo is acquired by the attacker,” the researchers write in a publicly available paper. “… The attack is totally unobservable by nearby people, because not only is the light invisible, but also the device we made to launch the attack is small enough. According to our study on a large data set, attackers have … [an] over 70 percent success rate for finding such an adversarial example that can be implemented by infrared.”

This isn’t the first example of so-called “adversarial objects” we’ve come across. We’ve previously covered adversarial glasses that can render people unrecognizable to facial recognition, and a technique for altering the textures of a 3D-printed object so that, for instance, image-recognition systems identify a 3D-printed turtle as a rifle.

The new study from China hasn’t been peer-reviewed yet, so we can’t vouch for its results. Still, it joins a growing list of examples highlighting the potential weaknesses of computational image recognition.